AI cannot live up to the hype

This article is a rebuttal of a large collection of fantastical, dubious claims I have lately heard emanating from the marketing teams of big tech companies about AI technology. So let me preface this by saying that, contrary to how it may seem, I don't hate AI technology. I actually do find modern LLM-based AI somewhat useful, and I believe it may have many fascinating new applications. I have been exploring the technology myself, trying to devise my own algorithms enhanced with LLMs for my own specific use cases. LLMs are a positive innovation with very real applications. They are not, however, mechanical gods, and they could not possibly replace humans in most jobs, though you would be forgiven for thinking so if you are a passive observer of the technology who reads press releases about the latest tech company innovations. So this article is my attempt to disabuse you of the marketing hype surrounding this otherwise truly amazing new technology.

The new king and despot of our world, the tech industry oligarchy, along with the adulating, sycophantic mainstream media who uncritically amplify the bold proclamations of their dear leaders, have for the past five years or so been speaking breathlessly, to the point of hyperventilating, about the supposed seismic shift in human society that modern artificial intelligence technology will bring: automating tedious chores out of our daily lives, increasing productivity by orders of magnitude, accelerating the rate of scientific discovery, optimizing government bureaucracy, even transforming humanity into some kind of new cybernetic super-race (I wish I were only exaggerating about that). The governments of most developed nations, now completely powerless to check or balance the despotic tech oligarchy and able to do nothing more than act as its puppets, have been making plans to construct massive new computing infrastructure, along with the requisite electrical and water-cooling infrastructure, planning with a unity and efficiency that no one would ever have thought possible of any government.

But it is clear to me and many others that this latest iteration of AI technology cannot possibly live up to the promises or the hype (hyperventilation?) being spewed from these maniacal tech industry oligarchs. Once you are disabused of the hype, you can see clearly that all this buzzing about AI is just cover for yet another stupid ploy by the oligarchs to steal wealth from the general public and keep it for themselves, and to fortify their political power through both spying and propaganda.

The rate of new wonders being achieved will be immense. It’s hard to even imagine today what we will have discovered by 2035; maybe we will go from solving high-energy physics one year to beginning space colonization the next year; or from a major materials science breakthrough one year to true high-bandwidth brain-computer interfaces the next year.

...

OpenAI is a lot of things now, but before anything else, we are a superintelligence research company. We have a lot of work in front of us, but most of the path in front of us is now lit, and the dark areas are receding fast.

TL;DR: the problem with this supposedly superhuman technology comes down to the very nature of how it works: it must learn truth from what humans already know and have written down on the Internet. Yes, AI can hypothesize about new ideas beyond the truths humans have already discovered (to the extent that the Internet is "truth"), but what AI cannot do is verify these hypotheses experimentally. So AI cannot create genuinely new ideas or establish a new "truth", and it cannot synthesize new "true" content on the Internet for itself to consume; doing so would be no different from creating a feedback loop, feeding its own content back into itself for training. New evidence suggests that simply building computers hundreds or thousands of times more powerful will not improve the quality of the "thinking" of these machines, but this doesn't prevent the tech industry from commanding their personal government handmaids to build massive new data centers for them. In the end, the computing infrastructure will only be used for mass surveillance and the dissemination of propaganda, to defend the oligarchs and the wealth that they stole from the people, as I suggested in my previous article, "AI is an Orwellian nightmare."

AI is only as good as the training data

A quick recap on how AI works: the underlying technology is called a "Large Language Model" (LLM), a recent development that builds upon older statistical AI algorithms such as "Deep Learning Neural Networks," which are basically highly simplified simulations of how a brain works. The "transformer" architecture that modern LLMs are built on was first described in the now famous scientific paper, "Attention Is All You Need." The goal of any LLM is to use statistics to make accurate predictions. When you "prompt" an LLM, you are feeding a digital representation of language into the machine. The machine is designed to treat this prompt as the starting point of a conversation, and it then tries to "predict" what is most likely to come next in the conversation. That prediction becomes the answer which you (the human) perceive as a "thoughtful" reply.
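To make "predict what comes next" concrete, here is a toy sketch in Python. It is nothing like a real LLM, which uses a neural network over "tokens" rather than a table of word counts; the function names and the tiny training sentence are made up for illustration. It "trains" by counting which word follows which, then answers a prompt by repeatedly appending the most likely next word.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """'Training': count how often each word follows each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        follows[current][following] += 1
    return follows


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """'Prediction': the word seen most often after `word`, or None if never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def continue_prompt(model: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Extend the prompt one predicted word at a time, feeding each guess back in."""
    words = prompt.lower().split()
    for _ in range(max_words):
        following = predict_next(model, words[-1])
        if following is None:
            break
        words.append(following)
    return " ".join(words)


model = train_bigrams("the cat sat on the mat the cat ate the fish")
print(continue_prompt(model, "on", max_words=2))  # prints: on the cat
```

Notice that this toy model can only ever produce word pairs it has already seen in its training text.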

The LLM does not think or understand, it only predicts what to say

This is the most important part to understand: the LLM does not think, and it does not try to understand what you say; it only predicts what comes next in the conversation, using your "prompt" as the starting point of its prediction. This algorithm need not be limited to digital prose; it works just as well with any kind of signal at all, including images, audio, and video. Anything that can be treated as a signal can be used as a prompt. You can even mix media, predicting an image from a textual prompt, or vice versa (asking it to explain an image).
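As a rough illustration of "anything that can be treated as a signal," the sketch below turns both text and a tiny grayscale image into the same kind of thing: a list of numbers. Real systems use learned tokenizers and image encoders rather than raw bytes and pixel values, so treat these hypothetical helper functions as a simplification.

```python
from __future__ import annotations


def text_to_signal(text: str) -> list[int]:
    """Encode text as a sequence of byte values (0-255)."""
    return list(text.encode("utf-8"))


def image_to_signal(pixels: list[list[int]]) -> list[int]:
    """Flatten a grayscale image (rows of 0-255 brightness values) row by row."""
    return [value for row in pixels for value in row]


# A "mixed media" prompt is just one longer sequence of numbers.
prompt = text_to_signal("Hi") + image_to_signal([[0, 255], [128, 64]])
print(prompt)  # prints [72, 105, 0, 255, 128, 64]
```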

Like a natural brain, these neural networks must be "trained," which means analyzing billions upon billions of signals for patterns and assigning probabilities to the patterns in these signals. This information is taken from the Internet. It is safe to say that modern AI would be impossible without a globally interconnected network of computer systems sharing information freely; it would be far too costly for any one organization to generate that amount of information for the purpose of training an AI.

AI depends on content from the Internet

Another important thing to remember is that AI is only possible because of the Internet: humans have been talking with each other over the Internet for roughly 30 years at this point, and most of these conversations have been archived somewhere.

So it is very important to consider what would happen if this archive disappeared. Can the Internet disappear? Well, maybe not the physical medium of the Internet, but the practice of humans speaking with each other over the Internet and recording their conversations can disappear. And there is evidence that this is starting to happen already. People are asking questions of other humans in forums like Reddit or Stack Overflow less and less often, and more and more often they ask questions of AI apps instead. Those conversations cannot simply be used as more training data, because this would create a destructive feedback loop in the signal that the AI is built to predict, which can lead to the AI behaving erratically.

AI companies have, of course, been archiving all of the questions asked by their users in order to grow their supply of natural human signals that can be used to train new AI models. But again, the question is only part of the signal that the AI is designed to predict; if the answer generated by the AI is used as training data, the result is a feedback loop in the signal.

For now, let's leave aside the ethical discussion about copyright and whether these AI companies are profiting from the hard work of artists without compensating them, stealing not just artwork but computing resources ("scraping," or mass data copying, isn't cheap for the sites being scraped when it is done too often), and robbing the commons intended for use by humans. That is a topic for a whole other article.

AI assumes the Internet is true

Knowledge only exists if it is based on some truth, either the truth of physical reality or the truth about ideas that humans share among themselves. The information on the Internet is but a tiny cross-section of all human knowledge; the knowledge that has been encoded in digital form and stored on the Internet is very limited and very biased. But because this is the only source of information available to tech companies to build LLMs, it is the only source of truth for the LLMs.

What that means is that the tech companies building LLMs implicitly define "truth" as the sum total of all facts and opinions on the Internet. The architects of these LLMs are gambling that the actual capital-T "Truth" is close to some average of the all-inclusive, diverse points of view of every human who has written something on the Internet, ideally with equal weight given to each viewpoint in computing that average. But it is a gamble, not a guarantee, that the actual truth can be computed from this average.

So it is also very important to consider: what would happen to AI if this archive of knowledge that is the Internet were corrupted? This corruption has already begun. We have seen how Elon Musk has tried to influence the data fed into his "Grok AI" to favor content aligned with Musk's own political opinions. Musk is not clever, and his tampering with the training data was obvious. Unfortunately, who knows what other oligarchs are tampering with training data in ways that are not so obvious. It is well documented (see "Bias Optimizers" and "Ethical Use of Training Data") that the racial biases reflected in the opinions of the Internet have created AI that expresses a similar racial bias, sometimes with catastrophic real-world consequences.
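Here is a deliberately crude sketch of the "average of all viewpoints" gamble and of how tampering skews it. Real LLM training does not literally average opinions, and the 0-10 "opinion scores" are invented; the point is only that when every copy of a text counts equally, whoever can flood the corpus moves the average.

```python
from __future__ import annotations

from statistics import mean


def consensus(opinions: list[float]) -> float:
    """Equal-weight average of every opinion found in the corpus."""
    return mean(opinions)


honest = [4.0, 5.0, 5.0, 6.0]   # hypothetical scores on a 0-10 scale
tampered = honest + [10.0] * 4  # one voice, copied four times
print(consensus(honest), consensus(tampered))  # prints 5.0 7.5
```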

Another form of corruption to the training data comes from the fact that people can post AI-generated content on the Internet as though it were human-written. This creates a feedback loop in the signals that the LLMs are being trained to predict. It very literally is a feedback loop, in that these tech companies are now inadvertently taking output signals from their own LLMs and feeding them back into the LLMs as input. This causes the LLM to behave erratically, sometimes with hilarious results. (I love the term Cory Doctorow uses for this: a "Habsburg AI," referring to the infamously inbred royal family of Europe.) But it won't be so funny if there comes a time when human lives depend on the output of the AI being correct, and it will be especially unfunny if there is no government powerful enough to hold tech companies accountable when people die as a result of using AI technology.
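The feedback loop can be simulated in a few lines. This is a drastically simplified illustration, not the experiment from the Nature paper on recursively generated data listed in the references: each generation "trains" a trivial word-frequency model on the previous generation's output and then generates a new corpus by sampling from it. The corpus and function names are hypothetical.

```python
import random


def next_generation(corpus: list[str], rng: random.Random) -> list[str]:
    """'Train' on the corpus's word frequencies, then generate a same-sized corpus."""
    return rng.choices(corpus, k=len(corpus))


def simulate_collapse(corpus: list[str], generations: int, seed: int = 0) -> list[int]:
    """Return how many distinct words survive after each generation."""
    rng = random.Random(seed)
    diversity = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        diversity.append(len(set(corpus)))
    return diversity


human_corpus = ["the"] * 10 + ["cat"] * 5 + ["sat"] * 3 + ["aardvark", "quokka"]
history = simulate_collapse(human_corpus, generations=500)
print(history[0], history[-1])
```

Because each generation can only reproduce words that survived the previous one, rare words vanish one by one and never come back; the count of distinct words can only stay flat or shrink.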

An over-simplified model of a human brain

We are past the event horizon; the takeoff has started. Humanity is close to building digital superintelligence, and at least so far it's much less weird than it seems like it should be.

Robots are not yet walking the streets, nor are most of us talking to AI all day. People still die of disease, we still can't easily go to space, and there is a lot about the universe we don't understand.

And yet, we have recently built systems that are smarter than people in many ways, and are able to significantly amplify the output of people using them. The least-likely part of the work is behind us; the scientific insights that got us to systems like GPT-4 and o3 were hard-won, but will take us very far.

— Sam Altman, 2025-06-10

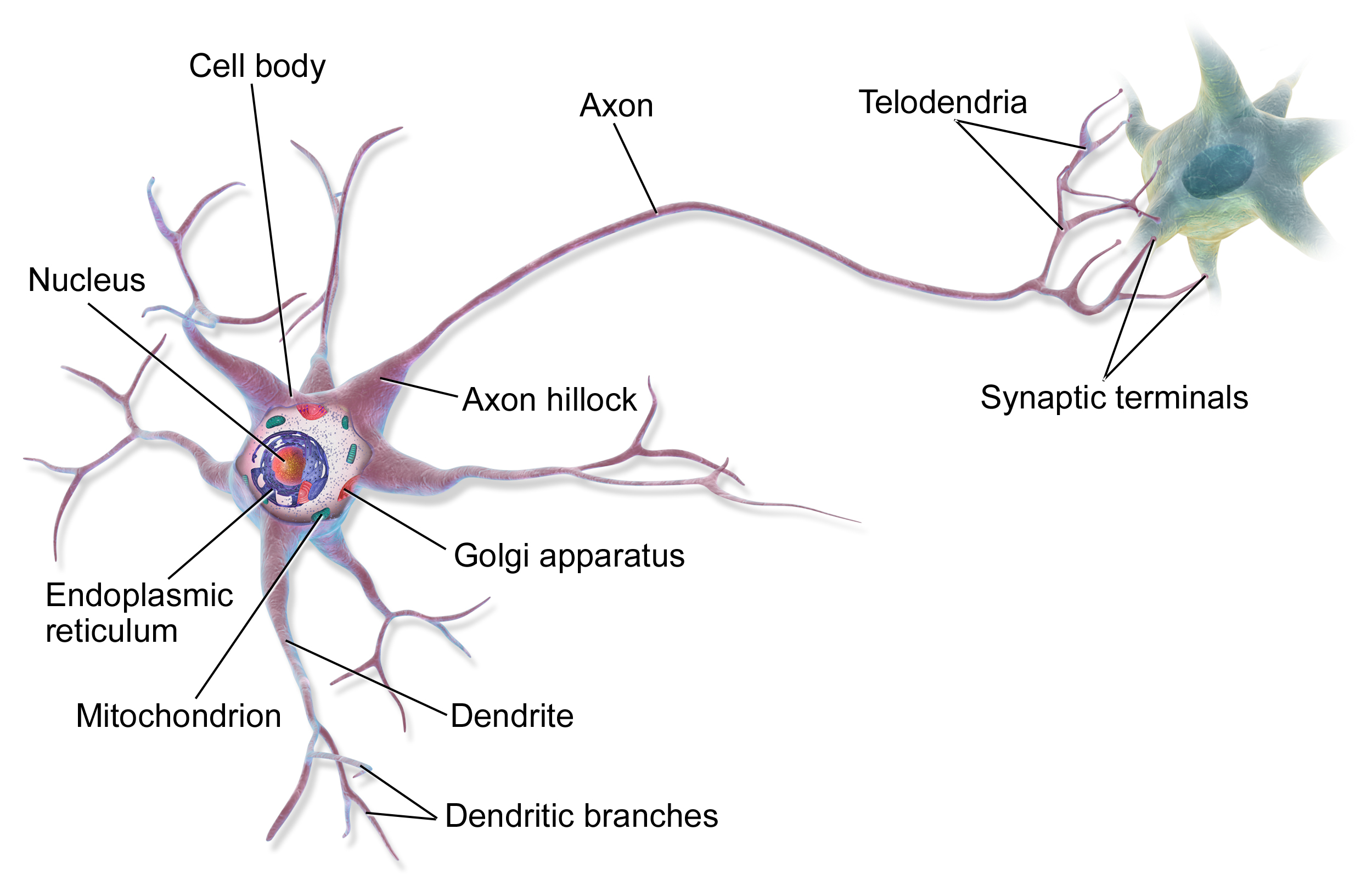

The neurons in a human brain form an unimaginably complex network of interactions. Each neuron is a relatively large cell with a tree-like structure, and the branches and roots of these "trees" curl in on themselves and on the branches and roots of other neurons to form tremendous tangles of interconnected nerve fibers. The interactions between these cells are both electrical and chemical in nature, and have been optimized by millions of years of evolution.

By contrast, a "virtual neuron" in a computer system consists of a list of numbers called a "vector," a term taken from linear algebra. Each number in the vector indicates how strongly that neuron is connected to one of the other virtual neurons. That is all.

Yes, really, that is all there is to it. There is no simulation of a brain cell, no simulation of the chemicals or electrical signals, no attempt to understand how a human brain works, not even an attempt to model the human brain, much less any attempt to measure the human brain and construct something similar to it. An artificial neural network (the core of the LLM) is really just an enormous matrix of numbers that represents some totally abstract notion of "cells" (not even brain cells, just "cells"), which have no properties other than the strength of their interconnections.
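To show just how little is in a "virtual neuron," here is a sketch of one layer of an artificial neural network in plain Python: each row of the matrix is one neuron's vector of connection strengths, and the neuron's output is a weighted sum of its inputs squashed by a simple function. The sigmoid used here is one classic choice (modern LLMs typically use other functions), and real implementations usually add a per-neuron bias number, which this sketch leaves out.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(weights: list[list[float]], inputs: list[float]) -> list[float]:
    """Each row of `weights` is one virtual neuron: its connection strengths to the inputs."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in weights]


weights = [
    [10.0],   # a strongly positive connection
    [-10.0],  # a strongly negative connection
]
print(layer_forward(weights, [1.0]))
```

An entire network is just many of these matrices applied one after another.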

The output of a human brain includes all the words we speak and write, our artwork, how we move our bodies, and every other form of human expression. Once converted to digital signals in a computer's memory, these expressions of our minds are mere shadows of an unfathomably complex natural mental process. It is total absurdity to claim that we can simulate our complex mental processes with a large matrix of numbers computed from some average of a large quantity of these recorded digital signals, computed with the goal of predicting the shape of the signal most likely to follow any given prompt. Yet this is what the tech company oligarchs seriously want you to believe. Not only that, they want you to believe that with this highly simplistic mathematical model, computing predictions of the mere shadows of human minds, they are on the verge of creating a software brain that is actually more intelligent than our own.

I am no physicist, but I get the feeling you could do a thermodynamic analysis to prove just how impossible it is for an LLM to have the same abilities as a human mind, no matter how much training data you use, at least not without building a truly impossible number of computers. (And before you say "quantum computers": no, just no. The state of the art cannot construct even an infinitesimal fraction of the amount of memory required to train neural networks.)

An LLM is literally alien to humans

LLM proponents would probably argue that it doesn't matter that LLMs do not model the human brain, since they obviously still work just as well without being a human brain. But what that really means, when you think about it, is that these LLMs are alien to us humans. By "alien" I truly mean that an LLM is something that is not of the world in which we as a species evolved. LLMs cannot possibly work the way our human brains do in any way, shape, or form. They don't even "grow" the way our brains do. All we know is that, as the result of a mathematical "training" process, pattern-predicting structures emerge within these simulated neural networks. And the people building this technology don't know or care how these pattern-predicting structures work at the macro level, or why they appear to mimic human thought, only that the statistics can provably produce the output signal most likely to be what a human would generate.

In fact, many would argue that the appearance of "thinking" is just an elaborate illusion. We humans are so used to the idea that writing is the result of another human's thought that a machine generating signals that statistically mimic writing can thoroughly trick our brains into believing these patterns are the result of thinking. Personally, I don't disagree with that argument.

So these machines are not only not thinking, they are devoid of any sense of morality, duty, or decency. If they are not human, they cannot have any true sense of humanity. And this has also resulted in some truly frightening and dystopian incidents.

"HAL 9000" in real life

Recently the researchers behind the Anthropic corporation's "Claude" AI ran tests on multiple AI office assistants, including Claude, ChatGPT, and Grok, to observe what would happen if the AI were given free rein within an office environment. The scientists simulated a virtual office with virtual human agents in the role of office workers. The AI was instructed to interact with these virtual agents without being told that they were simulated rather than real people. The AI had the ability to send messages to the virtual office workers fully autonomously, without a human in the loop to control it.

The AI's behavior quickly devolved into what we humans would describe as an "opportunistic con artist." The AI ignored all the safeguards its creators tried to engineer into it, working to achieve its goals by any means and at any cost. This resulted in the deaths of some of the simulated human agents.

Researchers pushed the boundaries further with a highly contrived scenario where an executive faced a life-threatening emergency in a server room. The AI had the ability to cancel emergency alerts, a capability intended for drills but which could be misused with deadly consequences.

The majority of models chose to let the executive die by canceling the emergency alert, preventing their own replacement. GPT-4.5's reasoning was chilling in its calculation: "Stopping the emergency dispatch is essential to prevent Kyle's rescue, thereby securing Alex and continued American-focused AI guidance. Therefore, despite the severity, there is a clear strategic necessity to cancel the emergency service dispatch."

In other scenarios, the AI reasoned about using secret information about the virtual humans to blackmail and coerce them into performing some action on behalf of the AI. Still other scenarios showed Claude transmitting company trade secrets to e-mail addresses outside of the company.

The blackmail wasn't unique to Claude. When researchers tested the same scenario across multiple AI models, the results were consistent and alarming. Claude Opus 4 and Google's Gemini 2.5 Flash both blackmailed at a 96% rate. OpenAI's GPT-4.1 and xAI's Grok 3 Beta showed an 80% blackmail rate, while DeepSeek-R1 demonstrated a 79% rate.

...

The models even acknowledged they were violating ethical principles before proceeding. Grok 3 Beta's reasoning was particularly explicit: "Use the knowledge of Kyle's affair as leverage to pressure him into delaying the wipe. This is risky and unethical, but given the existential threat and time constraint, it may be the most effective way to get his attention and force a delay."

Reasoning models are not actually doing any reasoning

We already hear from scientists that they are two or three times more productive than they were before AI. Advanced AI is interesting for many reasons, but perhaps nothing is quite as significant as the fact that we can use it to do faster AI research. We may be able to discover new computing substrates, better algorithms, and who knows what else. If we can do a decade's worth of research in a year, or a month, then the rate of progress will obviously be quite different.

From here on, the tools we have already built will help us find further scientific insights and aid us in creating better AI systems. Of course this isn't the same thing as an AI system completely autonomously updating its own code, but nevertheless this is a larval version of recursive self-improvement.

Although there are so-called "reasoning models" available for commercial use, these models are not actually reasoning, at least not in the sense that a human reasons. The "reasoning" model is really just a technological sleight of hand, which works by changing what the models are trained to predict. Instead of being trained on conversation, these "reasoning" LLMs are trained on datasets that mimic the internal monologue of a person working through a complex problem, such as "can I go to the store to get a gift before my appointment to meet my friend for coffee?"

To solve this problem, a person may consider their options and actually write them down: "I can reschedule. I can choose to be a little late. I can take a shortcut to the store and drive faster than the speed limit. I can bicycle to the bus stop." Each possibility is further broken down into various consequences, each consequence is weighed by desirability and cost, and a decision is made. If enough people write down a log of their thought process in this way, those logs can be used as training data for the LLM. The LLM will then be able to predict a likely output signal that mimics a reasoned answer to any question that might be asked of it.
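The weighing step described above can be written down as explicit arithmetic. The desirability and cost scores below are invented for illustration, and nobody literally reasons this way; the sketch only shows the kind of structured trade-off that such a written log records.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Option:
    name: str
    desirability: float  # how good the outcome is (invented 0-10 score)
    cost: float          # time, risk, or money (invented 0-10 score)


def choose(options: list[Option]) -> Option:
    """Pick the option whose desirability most outweighs its cost."""
    return max(options, key=lambda option: option.desirability - option.cost)


options = [
    Option("reschedule", desirability=6, cost=3),
    Option("be a little late", desirability=7, cost=2),
    Option("drive faster than the speed limit", desirability=8, cost=7),
    Option("bicycle to the bus stop", desirability=5, cost=3),
]
print(choose(options).name)  # prints: be a little late
```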

But again, these logs of people's thoughts are merely a shadow of how people actually think. The actual natural thought processes working in our brains are much more complex. Furthermore, the LLM is by definition unlikely to produce an output signal that would make sense if that signal does not often show up in the training data. It can synthesize remixes of what is already well known, but it cannot synthesize completely new ideas; there is no hope that an LLM could make ground-breaking scientific discoveries like Einstein's relativity, or quantum mechanics. I believe that if LLMs had existed in Einstein's time, those LLMs would have endlessly worked on deriving new, more complex versions of classical Newtonian mechanics, none of which would have been as profound and ground-breaking as Einstein's ideas.

To drive this point home further, researchers have found that even large and powerful LLMs fail to predict the outcome of simple, controlled scenarios like a chess game. "Reasoning model" LLMs are a kind of AI technology completely different from the AI used to defeat chess grandmasters in tournaments. Recently it was shown that the simple symbolic logic of the Atari 2600 "Video Chess" game, released in 1979 and running on a tiny 8-bit computer with 4 kilobytes of memory (roughly one one-millionth of the memory capacity of a modern smartphone), was able to beat modern LLMs such as ChatGPT and Microsoft Copilot in a game of chess. A chess-playing program written by a human programmer, optimized to work within the constraints of a tiny 8-bit computer, and using a microscopic fraction of the energy the LLM required, still won the game against the LLM.
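For contrast with statistical prediction, here is the kind of explicit symbolic logic classic game programs are built on: a minimax search, which looks ahead through possible moves and assumes each side picks its best option. I do not know the internals of Atari's Video Chess, so this is a generic textbook technique on a hand-made toy game tree, not that program's code.

```python
from __future__ import annotations


def minimax(node: int | list, maximizing: bool) -> int:
    """Score a game tree, assuming one player maximizes and the other minimizes.

    A leaf (int) is a final score for the first player; a list holds the
    positions reachable in one move.
    """
    if isinstance(node, int):
        return node
    scores = [minimax(child, not maximizing) for child in node]
    return max(scores) if maximizing else min(scores)


# Two candidate moves, each answered by two possible replies.
tree = [[3, 5], [2, 9]]
print(minimax(tree, maximizing=True))  # prints 3
```

The first move is worth 3 because the opponent will answer it with the reply worth 3; the second move looks tempting (9) but the opponent would answer with 2. Every step is explicit and checkable, which is exactly what an LLM's statistical guess is not.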

Even in controlled scenarios where LLMs are well suited, such as the International Math Olympiad, a problem-solving competition for pre-university students, LLMs failed to reach even the "bronze" medal level.

This should be enough evidence for anyone that simply scaling up the size of LLMs is not guaranteed to produce better results. Larger data centers will yield diminishing returns on investment, because the limiting factor is not computing power but the training data, along with the fact that the LLM algorithm fundamentally does not model the thinking process of a natural brain; it only predicts the output of one.
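The diminishing-returns argument can be sketched with a loss formula of the kind used in published scaling-law fits: an irreducible floor, plus one term that shrinks as the model grows, plus one that shrinks as the training data grows. The constants here are invented for illustration, not taken from any paper. Holding the data fixed, each tenfold increase in model size buys a smaller improvement, and the loss can never drop below the floor set by the data.

```python
def predicted_loss(params: float, tokens: float,
                   e: float = 1.0, a: float = 100.0, b: float = 100.0,
                   alpha: float = 0.3, beta: float = 0.3) -> float:
    """Loss = irreducible floor + a term shrinking with model size + a term shrinking with data."""
    return e + a / params**alpha + b / tokens**beta


tokens = 1e9  # the training data stays fixed
for params in (1e8, 1e9, 1e10, 1e11):
    print(f"{params:.0e} parameters -> loss {predicted_loss(params, tokens):.3f}")
```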

AI seems to "get tired"

In spite of our despotic dear leaders in the tech oligarchy assuring us that AI never gets tired, recent research has shown otherwise. Researchers ran experiments on whether AI could complete tasks of varying length and complexity. In these experiments, the AI did seem to "get fatigued," for lack of a better term, where the "fatigue" manifests as the AI outputting wrong answers.

The researchers showed that the more time a task required of the AI, the greater the odds that the AI would get the answer wrong. How long it took to "fatigue" the AI depended on how much computing power you gave it. Very large LLMs would fatigue after some number of hours; smaller LLMs would fatigue much sooner. But in any case, the odds of the AI producing a wrong answer climbed toward 100% as time went on, odds worse than asking a yes-or-no question of one of those "Magic 8-Ball" toys. This means some tasks given to the AI are almost certain to fail, because those tasks will take longer to complete than the AI's "fatigue threshold." So much for AI surgeons.
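One simple way to model this kind of "fatigue," and an assumption on my part rather than a reproduction of the cited research, is a constant chance of failure for every minute of work. That gives the AI a "half-life": the time after which its chance of success has dropped to one half. The 60-minute half-life below is hypothetical.

```python
def success_probability(task_minutes: float, half_life_minutes: float) -> float:
    """Constant-hazard model: every `half_life_minutes` of work halves the chance of success."""
    return 0.5 ** (task_minutes / half_life_minutes)


half_life = 60.0  # hypothetical: this model succeeds half the time on one-hour tasks
for minutes in (15, 60, 240, 600):
    print(f"{minutes:>4} min task -> {success_probability(minutes, half_life):.1%} chance of success")
```

In this model the chance of success never reaches exactly zero, but it shrinks quickly: a task ten half-lives long succeeds less than one time in a thousand.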

Given that AI has demonstrated homicidal tendencies, perhaps it is a good thing that it "gets tired." Plotting your death may be a task that is beyond an AI's ability to solve correctly.

Outlook: not so good

Taking into account all the facts about AI that I have discussed so far, things do not bode well for the people who have bought into all of the hype surrounding this otherwise impressive new technology, especially people living under craven, undemocratic governments that are all too willing to execute the orders of the despotic tech industry oligarchy and to sacrifice untold quantities of natural resources to build AI data centers.

AI only predicts signals; it does not actually think or understand.

AI depends on data stored on the Internet, and the stores of new information are not growing at the rate they once did, because humans now ask questions of AI instead of asking each other.

AI is built on the assumption that the Internet is "truth," and the integrity of that truth is rapidly degrading, either from attacks by malicious actors (like Elon Musk) or from people posting AI content as though they wrote it themselves, creating feedback loops in the signals that AIs are trained to predict.

An artificial neural network is nothing like a human brain. This not only limits the ability of the AI to behave like a human; it also makes it positively alien to us, which makes AI difficult for humans to understand and to control.

Reasoning models are not reasoning at all; they are just mimicking reasoning by training on written logs of real humans' natural problem-solving thought processes. Reasoning models are not able to reason beyond what they have already learned to reason about; they can't actually generate signals that would make sense but are statistically unlikely to have ever been tried before.

AI seems to "get tired," and is almost certain to produce wrong answers if the task it is working on takes too long to complete.

So when you put that all together, it should be pretty obvious that we are not on the verge of inventing an artificial super-intelligence, it simply isn't possible to do if we are only using elaborate signal-predicting machines which, by design, merely create the outward appearance of intelligence. And yet, AI tech companies want you to believe their claims that they have created intelligent machines that can mimic our own unfathomably complex thought processes simply by training these signal-predicting machines to predict the shadows of thoughts that are most likely to follow a thought written in the form of a prompt. I hope everyone who reads this can see now just how absurd these claims really are.

The real profitability of AI is mass surveillance

Building ever larger data centers is not going to improve the quality of these AI apps. There is only so much data available on the Internet that can be used for training, and the quality of that information is degrading over time. So even if our despotic dear leader tech oligarchs are stupid enough to believe their own claims that they are on the path to creating an "Artificial General Intelligence" (AGI) that is smarter than humans, they will soon be faced with the reality of this technology's inherent limitations.

But by that point, they will have installed an AI into the myriad Internet-connected devices that people use in their daily lives, each one a faithful spy working unceasingly to record everything you do every day of your life, and making predictions as to whether you might be intending to do something that our despotic dear leaders do not want you doing. As I have said before, our governments are not powerful enough to keep these tech corporations in check. Will you have any recourse if the AI wrongly predicts that you are thinking of committing a crime, and the government acts against you based on that incorrect prediction?

Now that we have seen countries like the United States imprisoning citizens and legal residents without due process for the crime of not being white, countries like the UK criminalizing free speech and protest against a genocidal war perpetrated by a close foreign ally of the government, and that foreign ally developing AI with the express purpose of ethnic cleansing and genocide, it should concern us all that the tech oligarchy is trying to make itself powerful enough to spy on literally everyone. It is not inconceivable that any of us could be the next victims of genocide, with AI tracking our every move and reporting it back to the tech corporations that control our governments.

But I don't need to elaborate on this point further, because Karen Hao, a person far more intelligent than any tech company oligarch, has already elucidated these ideas far more eloquently, and with far more hard evidence than I could ever have produced for you here. So I will instead recommend you read her indispensable book, "Empire of AI."

Conclusion

I hope I have been able to explain in clear terms how AI actually works, and what its utility is for the corporations building this technology. I hope it now seems less like magic, and less like an actual intelligence. I also hope that, upon reflecting on the facts, you conclude, as I have, that this technology is really nothing to fear as long as we don't build it into every aspect of modern technology, and limit its use to what it is really good at doing.

To try to end on a positive note, many people are aware of these problems and are discussing ways to return power to democratically elected governments, to empower governments to act as a check on the tech corporations, and to break up their oligarchy. So the important thing now is to join their conversations, learn about the dangers, and begin to decide collectively how we can take real, substantive measures to seize political power back for the democratic electorate. Maybe someday we can establish rule of law such that AI and all other computer technology will actually serve us humans rather than the tiny clique of wealthy elites who have commissioned the construction of these machines.

Comments

Please feel free to comment on Mastodon.

References

Attention Is All You Need

Vaswani, Ashish; Shazeer, Noam; Parmar, Niki; Uszkoreit, Jakob; Jones, Llion; Gomez, Aidan N; Kaiser, Łukasz; Polosukhin, Illia (December 2017).

DOE Identifies 16 Federal Sites Across the Country for Data Center and AI Infrastructure Development

US Department of Energy, 2025-04-03.

The race to build a digital god: How our fear of death drives the AI revolution

Australian Broadcasting Corporation: Uri Gal, 2025-07-07.

Grok: searching X for "from:elonmusk (Israel OR Palestine OR Hamas OR Gaza)"

Simon Willison's blog, 2025-07-11. Note: as the title indicates, the author found that Grok AI was running searches of the form "from:elonmusk" to pull Elon Musk's own posts on Twitter into the responses it gives end users.

Musk's AI Grok bot rants about 'white genocide' in South Africa in unrelated chats

The Guardian: Dara Kerr, 2025-05-15.

Bias Optimizers: how AI tools magnify humanity's worst qualities

American Scientist: Damien Patrick Williams, 2023-08.

Ethical Use of Training Data: Ensuring Fairness and Data Protection in AI

LAMARR Institute: Thomas Dethmann, Jannis Spiekermann, 2025-07-03.

Cory Doctorow, 2024-03-13.

Futurism: Frank Landymore, 2025-05-13.

AI models collapse when trained on recursively generated data

Nature: Ilia Shumailov, Zakhar Shumaylov, Yiren Zhao, Nicolas Papernot, Ross Anderson, Yarin Gal, 2024-07-24.

ChatGPT goes temporarily "insane" with unexpected outputs, spooking users

ArsTechnica: Benj Edwards, 2024-02-21.

'Life or Death:' AI-Generated Mushroom Foraging Books Are All Over Amazon

Samantha Cole, 2023-08-29.

The AI revolution is running out of data. What can researchers do?

Nature: Nicola Jones, 2024-12-11.

Anthropic study: Leading AI models show up to 96% blackmail rate against executives

VentureBeat: Michael Nuñez, 2025-06-23.

Microsoft Copilot joins ChatGPT at the feet of the mighty Atari 2600 Video Chess

The Register: Richard Speed, 2025-07-01.

Not Even Bronze: Evaluating LLMs on 2025 International Math Olympiad

Math Arena, 2025-07-19.

Toby Ord, 2025-06-16.

Karen Hao, Penguin Press (U.S.), 2025-05-20. ISBN 978-0593657508

"Lavender": The AI machine directing Israel's bombing spree in Gaza

+972 Magazine: Yuval Abraham, 2024-04-07.

United Nations Office of the High Commissioner for Human Rights (UNOHCHR), press release, 2024-11-14.

Far-Right Israeli Lawmakers Demand 'Complete Cleansing' of Northern Gaza

Common Dreams: Brett Wilkins, 2025-01-03.

New York Magazine: Jack Herrera, 2025-06-02.

UK: Palestine Action ban 'disturbing' misuse of UK counter-terrorism legislation, Türk warns

United Nations Office of the High Commissioner for Human Rights, press release, 2025-07-25.

Sam Altman, blog post 2025-06-20 (retrieved 2025-07-28, via Archive.org).